Securing the Internet of Things in a Quantum World

The threat to IoT security posed by quantum computing and how it can be alleviated

Last time out, we discussed the future of Smart Cities – cities characterized by massive amounts of connectivity, where devices and sensors are an omnipresent feature used to streamline traffic flow, solve overcrowding issues, and much more. This is just one example of the power of the Internet of Things (IoT), the name given to the collection of physical devices enhanced by the ability to send and receive data wirelessly. Modern IoT devices are frequently identified by the “smart” label, such as smart watches – capable of notifying you about incoming texts and emails, as well as monitoring your exercise regime – or smart meters, which let you see in real time how much energy you’re using at home and how much it will cost you. These are examples of highly visible IoT devices, since you wear a watch on your wrist and you can see your energy usage tick up on the smart meter’s screen. However, the growth of the IoT will be driven by devices that receive less public attention: compact sensors and monitors across a wide array of industries will enable more efficient supply chains and a level of product management that was previously too complex to achieve.

Although the development of the IoT will enable a smoother world, one in which it is easier to interact with and understand your surroundings, the rapid development of these devices has come at a cost. Remember, the purpose of these devices is to send and receive data, and we live in a world where data has become increasingly valued, both by businesses, who use data to define their market strategies, and by individuals, who understandably wish to maintain a level of privacy. Since IoT devices are, by definition, connected to the internet, they need to be secured by carefully implemented cryptography that preserves the privacy and integrity of the transmitted data. Unfortunately, due to a combination of unique requirements, a lack of industry standards, and a desire for profits outweighing the perceived benefits of good security practice, many IoT devices are inadequately protected from malicious interference or, worse, not protected at all.

Security in the IoT

Given that internet security is not exactly a novel idea, an obvious question arises: if IoT devices are capable of connectivity, why can’t we protect them the same way we protect everything else? To answer this question, we have to understand the unique properties that define IoT devices and why they preclude standard security techniques. The IoT is a broad umbrella of devices, so not every device will have the same features – your smartphone has more in common with your laptop than it does with a smart fridge – but it is still helpful to consider what most IoT devices have in common to demonstrate what drives the complexity of protecting them. Let’s take a quick look at some of the key issues facing IoT security practitioners today, and consider why they’re proving hard to mitigate.

Constrained Devices

The number one feature of IoT devices that makes them hard to secure is an inherent part of their nature: they’re typically small, low-powered devices, each designed to fulfil a very specific purpose. In order to keep them compact and focused on their designated task, compromises must be made in their hardware and thus their computational capabilities. Although not true of all devices – again, your smartphone is perfectly capable of streaming HD content and is not exactly a low-powered device – the majority of devices in the IoT are known as “constrained”, meaning that they lack certain functionalities common to larger, more powerful computers. What exactly does it mean for a device to be constrained? Much like the IoT, this is a catch-all term intended to encompass a lot of ideas, but most common constraints can be put into one of two categories:

- Power: an IoT device only needs enough computing power to perform its task. For example, a smart chip inside a fridge that measures the internal temperature only needs to be able to read its thermometer and transmit the corresponding data. It will most likely lack the power to perform expensive operations, since it has no need for them.

- Memory: a device whose purpose is to transmit real-time data may not have much memory, since if it is transmitting the data to a server somewhere then it is probably easier to store the data there. What little memory the device has may already be in use, for example so that the device can remember the location it needs to send the information to.

These constraints are a huge complication for security; robust cryptography is not cheap to perform, and typically requires storage of temporary or permanent keys.

One elegant solution for key management is to have hardware-driven device identities, either to derive keys or as a replacement. These can be understood like a digital version of a fingerprint: manufacturing processes are pretty consistent these days, but zoom in to a sufficiently microscopic level and you will find different devices from the same production line have small but detectable variations. These differences can be used to identify devices in the same way fingerprints, and other biometric IDs, are used to identify humans; each device has a unique fingerprint-like pattern in its hardware, and it is the only device able to present it. A particularly appealing feature of these IDs is that they circumvent the need to store keys. Since the fingerprint is unique to the device and the device can read its fingerprint – essentially accessing its keys – from hardware, it is free to save its memory for performing its intended task.
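To make the idea of deriving keys from a hardware identity a little more concrete, here is a minimal sketch using only the Python standard library. It assumes the device can read a stable fingerprint as a byte string; `read_fingerprint`, the fixed fingerprint value, the salt and the purpose labels are all hypothetical placeholders. A real hardware fingerprint is typically noisy and needs error correction before it can be used this way, which this sketch leaves out. The derivation step follows the HKDF construction from RFC 5869.

```python
import hashlib
import hmac


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869) extract-then-expand, built from HMAC-SHA256."""
    # Extract: condense the input keying material into a pseudorandom key.
    prk = hmac.new(salt, ikm, hashlib.sha256).digest()
    # Expand: stretch the pseudorandom key to the requested length.
    okm = b""
    block = b""
    counter = 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def read_fingerprint() -> bytes:
    # HYPOTHETICAL stand-in for reading the hardware fingerprint.
    # A fixed value is used here purely so the example is reproducible.
    return bytes.fromhex("5a" * 32)


def derive_device_key(purpose: bytes) -> bytes:
    # Nothing is stored: the key is recomputed from hardware on demand.
    # The salt is an assumed, public, network-wide constant.
    return hkdf_sha256(read_fingerprint(), salt=b"example-iot-network", info=purpose)


encryption_key = derive_device_key(b"encryption")
authentication_key = derive_device_key(b"authentication")
```

Because each key is recomputed whenever it is needed, the device never has to keep it in persistent memory, and distinct purpose labels yield independent keys from the same fingerprint.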

Servers and Denial of Service

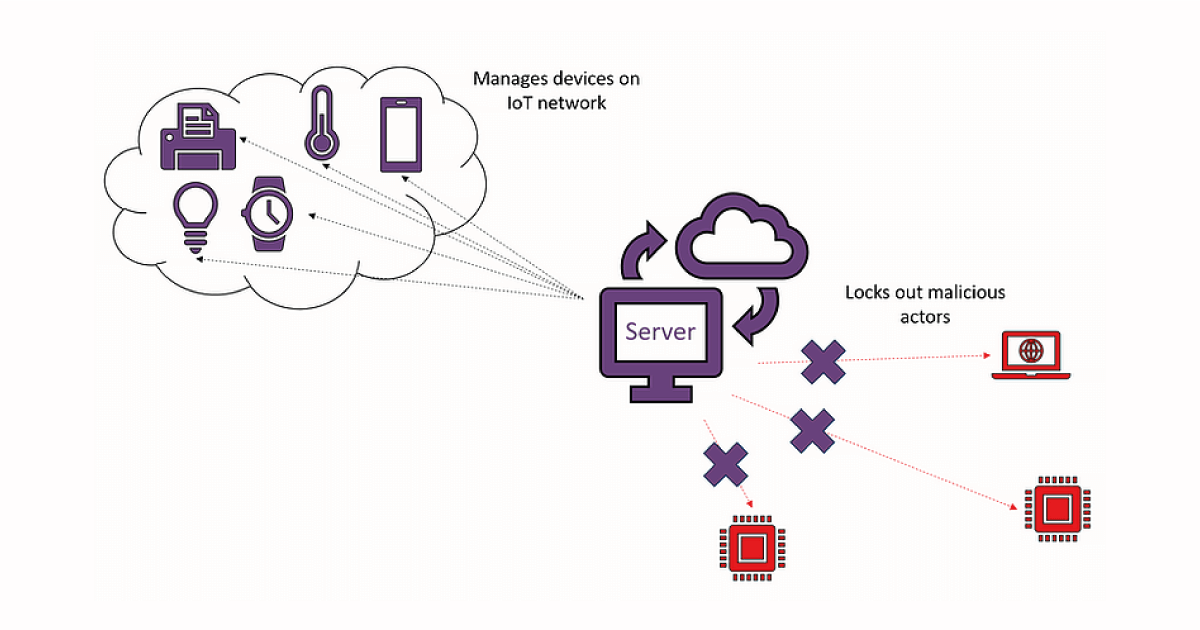

Since IoT devices are constrained and characterised by frequent transmission of information, a natural idea is to move as much computation as possible “server-side”. The information gathered has to be sent somewhere – let’s call it a server for now – where it can be used and understood, and this server will typically be much more powerful than the devices themselves. Thus, IoT networks rely on this central location to do the heavy lifting, managing the data sent by all the devices and shouldering as much of the network security burden as possible.

In order to facilitate this, devices and servers need to establish near-constant communications that are both reliable and private. Privacy is just a matter of well-managed encryption, so for now let’s focus on reliability, and in particular authenticity: how does the device know that it is sending its information to the correct server, and in turn how does the server know the device is genuine rather than a fake? Perhaps a nefarious individual has tried to get free energy by incapacitating their smart meter and installing a fake that reports to the energy company that no energy is consumed in this house. To prevent attacks like this, an IoT network needs some system in place to guarantee members are who they say they are, typically in the form of digital signatures and public key infrastructure.

As well as preventing fake devices, signatures can be used to protect against denial-of-service (DoS) attacks. Unlike cracking encryption or forging signatures, attacks that are typically used to steal information or implant fake information, DoS attacks are a simpler mechanism with a simpler aim: to prevent devices from performing their duties. This is usually achieved by disrupting device-server channels, either by creating a fake server to intercept signals sent by the device or by overloading the server by issuing requests with fake devices. Since IoT networks contain large quantities of devices connecting to centralized servers, they are particularly vulnerable to DoS-style attacks.
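The shape of such an authenticity check can be sketched in a few lines. Note the substitution: the Python standard library has no digital signature scheme, so this sketch swaps in an HMAC over a server-chosen nonce with a pre-shared key in place of signatures and public key infrastructure. It also only authenticates the device to the server, not the reverse. The `Server` class, `device_response` and the device name are hypothetical names chosen for illustration.

```python
import hashlib
import hmac
import secrets


class Server:
    """Toy server that only accepts data from enrolled devices."""

    def __init__(self, enrolled: dict):
        self.enrolled = enrolled   # device_id -> pre-shared key (assumed provisioned)
        self.pending = {}          # device_id -> outstanding nonce

    def challenge(self, device_id: str) -> bytes:
        nonce = secrets.token_bytes(16)
        # Only track challenges for known devices, so that fake devices
        # cannot make the server accumulate state.
        if device_id in self.enrolled:
            self.pending[device_id] = nonce
        return nonce

    def verify(self, device_id: str, tag: bytes) -> bool:
        key = self.enrolled.get(device_id)
        nonce = self.pending.pop(device_id, None)   # each nonce is single-use
        if key is None or nonce is None:
            return False
        expected = hmac.new(key, nonce, hashlib.sha256).digest()
        # compare_digest avoids leaking where the first mismatch occurs.
        return hmac.compare_digest(expected, tag)


def device_response(key: bytes, nonce: bytes) -> bytes:
    # The device proves knowledge of its key without revealing it.
    return hmac.new(key, nonce, hashlib.sha256).digest()


key = secrets.token_bytes(32)
server = Server({"meter-1": key})
nonce = server.challenge("meter-1")
accepted = server.verify("meter-1", device_response(key, nonce))
```

Rejecting unauthenticated requests with one cheap check, before any expensive processing, is also what limits how much work a flood of fake devices can force on the server.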

Physical Security

An often overlooked aspect of securing the IoT is handling the fact that devices are deployed in all sorts of places, and as a consequence care must be taken to manage the physical aspects of their security. Unlike someone trying to break encrypted messages you’re sending over the internet on WhatsApp, someone trying to compromise your IoT security might be able to get physical access to a device, and so perform specialized attacks using its hardware. For a non-cryptographic example, imagine someone wanted to attack a network of sensors used to detect smoke in a factory. If they wanted to fake a fire, all they need do is hold a lit match up to a detector! So the sensors must be deployed carefully to prevent this possibility – perhaps they can be put high up on the ceiling so that a person could not reach them.

In cryptography, physical attacks tend to fall into the category of side-channel attacks, which take advantage of how an algorithm is performed as opposed to breaching the underlying mathematics. After all, it is often considerably easier to verify that the maths is secure than it is to confirm that the code running it works exactly as intended. The archetypal side-channel attack is the timing attack: imagine your cryptographic algorithm requires that a device generates a random secret key for use in encryption. What happens if the algorithm that generates the key, let’s call it KeyGen, doesn’t always take the same amount of time to run? Then an attacker who has physical access to the device and can detect how long it took to run KeyGen might be able to learn something about the key output.

As a toy model, say KeyGen should output a random key that is a string of 5 bits, each equally likely to be 0 or 1. Mathematically this is very simple, but in practice how should you tell a computer to make KeyGen work? A naïve but not unreasonable idea is to start with the string 00000, then get the computer to flip a coin: if it turns up heads change the first bit to 1, and if not leave it fixed; then, repeat this process for each of the 5 bits. The output of this process is certainly a random string of length 5. However, the process of updating a bit from 0 to 1 may take the device a tiny bit of extra time, just enough for an attacker who can access the device to detect. Now the attacker can learn some of the key output by KeyGen: they’re able to detect how many bits of the key were flipped, and in some cases can even recover the whole key and steal any information encrypted under it.
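The toy model can be simulated directly. In this sketch, running time is modelled as an abstract step count rather than measured on real hardware: each coin flip costs one step and each write of a 1 costs one extra step. Both costs are assumptions made for illustration, and the function names are hypothetical.

```python
import secrets
from itertools import product

KEY_BITS = 5


def naive_keygen():
    """Return (key, steps), where steps models the running time."""
    key = [0] * KEY_BITS
    steps = 0
    for i in range(KEY_BITS):
        steps += 1                  # modelled cost of the coin flip
        if secrets.randbits(1):
            key[i] = 1
            steps += 1              # modelled extra cost of updating the bit
    return key, steps


def candidates_from_timing(steps):
    """All keys consistent with an observed running time."""
    flips = steps - KEY_BITS        # every extra step corresponds to one flipped bit
    return [list(k) for k in product((0, 1), repeat=KEY_BITS) if sum(k) == flips]


key, steps = naive_keygen()
candidates = candidates_from_timing(steps)
print(f"observed {steps} steps: {len(candidates)} of 32 keys remain possible")
```

Without the timing information the attacker faces all 32 possible keys. With it, at most 10 remain, and if the observed time is the minimum or the maximum there is only one candidate, so the whole key is recovered.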

Although the attack in the previous paragraph is pretty easy to prevent – just implement KeyGen in some way where it always takes the same amount of time – it illustrates a valuable point: you must be careful with IoT devices to make sure that they do not physically leak information to attackers. Other kinds of physical attack include power analysis – maybe if a key is a larger number it takes the device noticeably more energy to perform encryption than if it were small – and even fault injection, where an attacker is able to corrupt parts of memory using physical access to a device, perhaps forcing the device to forget its keys or, worse, “remember” a maliciously chosen key.
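As a rough illustration of the fix for the 5-bit KeyGen example, the sketch below writes every bit unconditionally, so the work done does not depend on the value of the key. Running time is again modelled as an abstract step count, which is an assumption for illustration. Python itself makes no constant-time guarantees, so this shows the structure of the idea rather than a hardened implementation.

```python
import secrets

KEY_BITS = 5


def uniform_keygen():
    """Return (key, steps); steps is identical for every possible key."""
    key = [0] * KEY_BITS
    steps = 0
    for i in range(KEY_BITS):
        bit = secrets.randbits(1)
        key[i] = bit        # always write, whether the bit is 0 or 1
        steps += 2          # modelled cost: one coin flip plus one write
    return key, steps


key, steps = uniform_keygen()
```

Every run takes the same modelled time, so observing it tells the attacker nothing about which of the 32 keys was generated.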

Industrial Fragmentation and Completeness of Vision

Alongside the cryptographic issues, the industry surrounding IoT devices also complicates their security. Essentially, the issue is one of fragmentation: the industry has too many players fulfilling distinct roles, and there is no one entity whose job is to ensure the security of a device from start, when the device hardware and chips are built, to finish, where the device is deployed and transmitting data. That is, completeness of vision – top-down management of all issues relating to security – is too often overlooked in the IoT.

To understand the need for complete management, consider the different businesses involved in deploying an IoT device, and what aspects of security they are each responsible for. Firstly, there’s the silicon vendor that builds the chips which make IoT devices “smart”, who must manage what information needs to be embedded on chips to allow them to find and access the appropriate IoT network. Next up is the device manufacturer who ordered the chips; they’re responsible for providing the physical device and the firmware to be run on the chips. This means they have some responsibility for ensuring that the chip handles more classical cryptographic concepts such as maintaining secure connections with the network. Finally, an IoT hub provider sells software to the network owner which allows them to manage information: a central location where they can collate and parse data obtained by the devices. This provider may be responsible for more classical aspects of network security, such as authentication and preventing DoS attacks.

This simple model of an IoT supply chain has a clear management issue, since there is no party whose duty is to ensure compatibility of all stages – perhaps the device manufacturer expects the IoT hub provider to check the authenticity of connecting devices, but the hub provider is only focused on providing user privacy; now, a malicious actor can enroll their device in the network as no party has taken steps to prevent this. For this reason security duties are often outsourced to third-party specialists, but even then one must be careful to understand the scope of the task given – a security company contracted to manage security and authenticity of wireless IoT hub communications, for example, does not have control over mitigating physical attacks.

PQC for the IoT

Despite its increasing omnipresence, the IoT is still an emerging technology. So, when considering how best to secure the IoT it is prudent to consider both current and future security standards. As discussed in our previous blog on Post-Quantum Cryptography, the security of the future must be resistant to attacks by both classical and quantum devices. Since PQC and IoT security are both swiftly moving fields, it can feel like neither has time to consider the other; PQC schemes are focused on protecting critical information against quantum attacks as soon as possible, and IoT devices are not designed with any of the new requirements of PQC in mind. Nonetheless, it is important to think about how the IoT can be secured using PQC, since it feels inevitable that both the number of connected devices and the power of quantum computers will continue to rise dramatically.

Ask an experienced cryptographer about IoT security in a quantum era and the first topic they bring up will likely be that of key sizes and digital signatures. Since IoT devices have constraints on both memory and power, they can have problems handling large keys or sending and receiving large messages. Additionally, signatures are extremely important for IoT devices, as they allow parties in the network to verify that everyone is authentic. The other two operations required in a standard TLS connection, which is how devices communicate with servers, are key establishment and symmetric cryptography. Even in a quantum era these should be more manageable for IoT devices to perform, so much of the focus on IoT security is on signatures.

Comparing the digital signature candidates remaining in the NIST process with ECDSA, a standard pre-quantum signature scheme, reveals the scope of the problem: to transmit a signature at 128 bits of security, ECDSA requires sending a public key of 256 bits (32 bytes) and a signature of around 576 bits (72 bytes). Contrast this with the most compact remaining NIST candidate, Falcon, at its lowest level of security: a public key requires 896 bytes and the signature 690 bytes. All in all, the Falcon transmission requires around 15 times more bandwidth than the ECDSA version, as well as more expensive computations and more memory to store keys. It seems unlikely that Falcon, or any other lattice candidate, will be able to squash its key sizes much more than it already has, so for the IoT a more radical solution might be necessary. SQISign is a novel isogeny-based scheme requiring bandwidth more similar to that of ECDSA, so if it survives a thorough security analysis then it could well be a savior for the IoT. Otherwise, devices might have to rely on methods other than signatures to authenticate to servers, such as a heavier reliance on KEMs or pre-shared keys.
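The "around 15 times" figure follows directly from the sizes quoted above, once everything is converted to the same unit. The short calculation below uses only those quoted numbers; the variable names are just labels for this example.

```python
# Sizes as quoted in the text: ECDSA in bits, Falcon in bytes.
ECDSA_PUBLIC_KEY_BITS = 256
ECDSA_SIGNATURE_BITS = 576
FALCON_PUBLIC_KEY_BYTES = 896
FALCON_SIGNATURE_BYTES = 690

# Convert ECDSA to bytes so both totals share a unit.
ecdsa_total_bytes = (ECDSA_PUBLIC_KEY_BITS + ECDSA_SIGNATURE_BITS) // 8
falcon_total_bytes = FALCON_PUBLIC_KEY_BYTES + FALCON_SIGNATURE_BYTES

ratio = falcon_total_bytes / ecdsa_total_bytes

print(f"ECDSA:  {ecdsa_total_bytes} bytes")
print(f"Falcon: {falcon_total_bytes} bytes")
print(f"Falcon needs {ratio:.2f}x the bandwidth")
```

That is 104 bytes against 1586 bytes for a public key plus signature, a ratio of 15.25.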

In terms of physical security, credit should be given to NIST, who have made it clear that third-round candidates should have implementations that bear side-channel attacks in mind. PQC schemes, and lattice-based ones in particular, tend to rely heavily on internal randomness and sampling from “error” distributions. When deploying these schemes in the IoT, it is important to make sure that this sampling happens in constant time, so that an attacker with physical access to the device can’t infer its output from how long it took. More generally, physical threats such as fault injection and power analysis also need to be considered when designing PQC schemes that might be deployed on the IoT, further complicating an already difficult process.
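One way to picture sampling with a fixed amount of work is a centered binomial sampler, the style of error distribution used by some lattice-based schemes such as Kyber. The sketch below draws a fixed number of random bits and subtracts two bit counts, with no rejection loop whose length would depend on the values drawn. The parameter `ETA` and the function name are assumptions for illustration, and Python itself offers no constant-time guarantees, so this only shows the structure of the technique.

```python
import secrets

ETA = 2  # assumed parameter: samples fall in the range [-ETA, ETA]


def sample_error(eta: int = ETA) -> int:
    """Centered binomial sample using exactly 2 * eta random bits."""
    bits = secrets.randbits(2 * eta)
    mask = (1 << eta) - 1
    a = bits & mask                 # first group of eta bits
    b = (bits >> eta) & mask        # second group of eta bits
    # The difference of the two bit counts is centered on zero.
    return bin(a).count("1") - bin(b).count("1")


samples = [sample_error() for _ in range(8)]
```

Contrast this with rejection sampling, where the number of loop iterations, and hence the running time, can depend on the random values being drawn.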

Outlook

Both IoT security and PQC are rapidly advancing fields, and it would be beneficial for them to be considered together, since in the end they will heavily impact each other. IoT security practitioners need to remain aware of ongoing standardisation processes, such as that of NIST, to understand best practice of PQC security. On the other hand, these processes are larger in scope than just the IoT, and both parties must take care to understand exactly how compatible the results of these processes are with the specific needs of the IoT. Fortunately, it seems that NIST is cognizant of these issues, since they take care to emphasise both the importance of side-channel attacks and the issues relating to bandwidth requirements of post-quantum signatures. However, there is still a great deal of work to be done, since available signature schemes are too unwieldy for use in practice. Furthermore, since both IoT hardware and PQC schemes are still evolving, there is plenty of scope for unseen complications to arise along the way.
